A whaley good outcome for Ms Blue

Andy Fontana, technical product specialist at Leica Geosystems, and Jess Marley, VAD Senior Supervisor at Halon Entertainment, talk us through the process of renovating and digitising a blue whale's skeleton: a groundbreaking project that saw film production tech repurposed to preserve an important environmental artefact.

For decades the Seymour Marine Discovery Center in California offered a home to 'Ms. Blue', an 84-foot whale skeleton and local icon. But the years and the elements weren't kind to the bones, leading the Center to look for sustainable ways to preserve the skeleton.

Enter Hollywood VFX studio Halon Entertainment, which had an idea for how to save Ms. Blue for future generations, starting with a LiDAR scan precise to the millimeter, made with a Leica Geosystems scanner. That led to a 3D model which became the base for a series of realistic, animated educational videos and an AR replica. The model was also used to create replacement bones, 3D printed from recycled hospital trays.

Can you tell us a little of Ms Blue’s history and how the skeleton ended up at the Seymour Center?

In 1979, Ms. Blue washed ashore on Pescadero Beach in California, and her bones were saved by University of California Santa Cruz faculty, students and staff. The bones were mounted on a steel structure in 1985, and then moved to their current location at the Seymour Marine Discovery Center in 2000.

Can you talk us through how the idea for the project first came about?

I was visiting family up in Santa Cruz, and while I was out on a run I stumbled upon Ms. Blue. During a discussion afterwards, my aunt, who lived nearby and volunteers at the Seymour Center, mentioned that the bones needed to come down due to safety concerns.

I decided to go back early the following morning and scan them with my phone using the RealityScan app. After processing the scan to Sketchfab and seeing the low-res scan in augmented reality, the concept was born. We could create a digital double which could replace the current bones in AR, while capturing the high-resolution detail with a Leica LiDAR scanner to preserve her for the Center. Once we got in touch with the Seymour team, the project took off.

Credits

- VFX Halon

- VFX Supervisor Jess Marley

What other options did the Seymour Marine Discovery Center consider when planning the renovation of the skeleton?

The team at the Seymour Center spoke to a few groups about options on how to preserve the skeleton, but none of them were a fit with Seymour’s sustainability ideology.

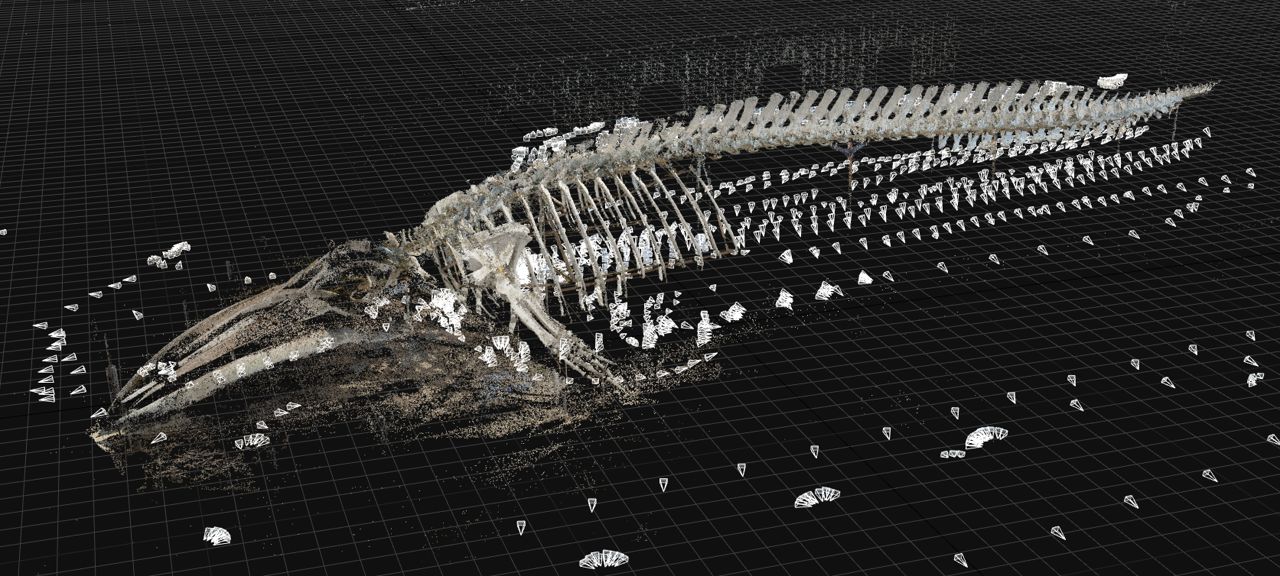

Our photogrammetry team, consisting of Patrick Surace and Tripp Topping, used LiDAR to scan her without disturbing a single bone, leaving her completely intact. This allowed us to replicate the exact position in which she sits today.

Above: creating a digital point cloud of Ms Blue's bones.

Can you explain what the Leica Geosystems scanner is and how it made a LiDAR scan of Ms Blue’s bones?

Leica Geosystems offers a few different LiDAR scanners. They can be used by any industry that needs an accurate scan of an area.

The Leica BLK360 is a terrestrial imaging laser scanner that takes full scans, with spherical images, of indoor and outdoor spaces, buildings and structures in twenty seconds. It captures accurate and reliable data of spaces and structures for a wide variety of users – Leica’s LiDAR scanners are being used in everything from VFX to architecture, AEC and more.

The scans from the BLK360 enable users to utilise the data in myriad ways (meshes, point clouds, digital measurements, flythroughs) for their specific workflows.

With the Leica RTC360 laser scanner, the Halon team captured more than 50 detailed scans of Ms. Blue in one day. The RTC360 offers a high-speed, non-contact solution that can capture the massive scale and the micro-details (down to the ridges and imperfections in the bones), regardless of the changing lighting conditions. It allowed the Halon team to capture the entire skeleton with millimeter-level precision, without having to worry about the quality of the data or gaps in the coverage. This enabled the Halon team to do what they do best and focus on creative storytelling.

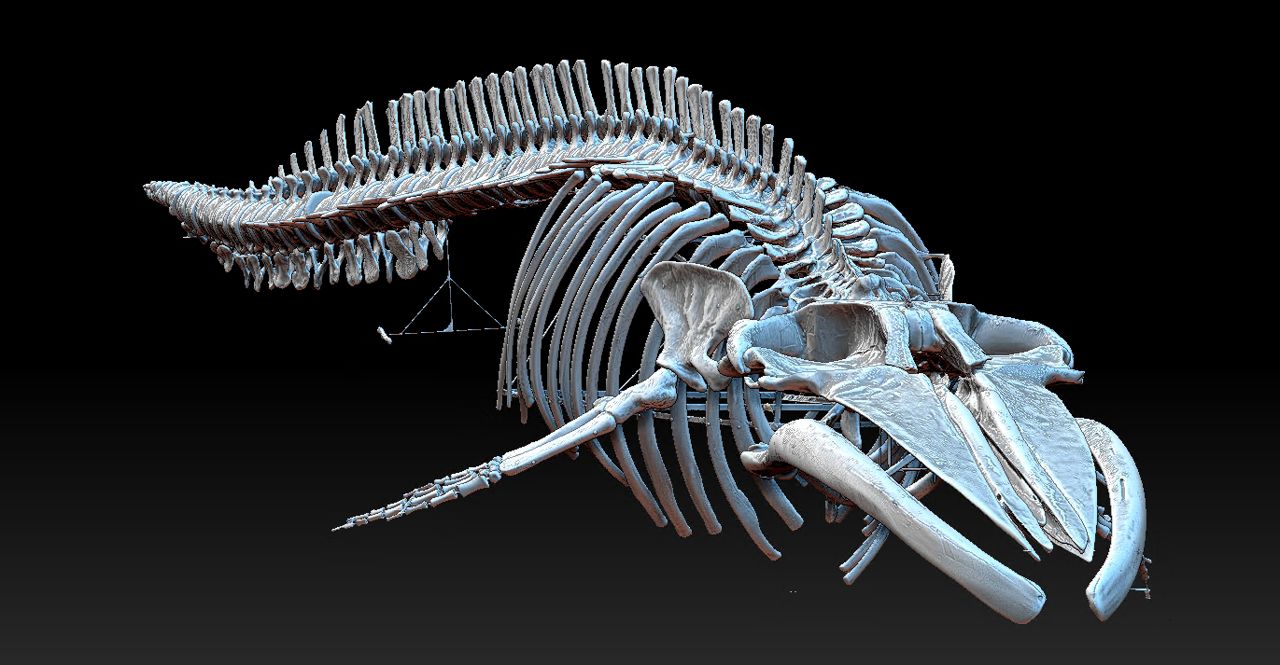

Using that scan data, Halon was able to create an ultra-realistic mesh of the skeleton. They then animated Ms. Blue to move realistically, referencing real blue whale movements in the sea and building custom animations in Unity.

Above: The skeleton was first displayed at the Seymour Marine Discovery Center in 2000 and became a popular local icon.

Once the 3D model of the skeleton had been built, what was the next stage? How did Halon set about rebuilding the rest of the whale?

Once Ms. Blue was scanned and meshed, we cleaned up the model, detailed the texture and reoriented bones that had become deformed over the years. We used RealityCapture to mesh the bones, then ZBrush to restore some of the details lost to years of exposure to the elements. We then cleaned up any anomalies.

Since we were also creating a skinned, or ‘live’, version of Ms. Blue for the app, we worked closely with the Seymour Center team to create an accurate anatomical model of a living blue whale. After constructing high-res models, it was a dance between ZBrush and Maya to create a lower-resolution ‘cage’ mesh – similar to game models, which are optimised for real-time use.

This mesh matches and lines up with the high-resolution form, but has a significantly lower polycount, which is required for use in AR. The final step involved using Substance Painter to bake the high-resolution geometry to the low-resolution cage, and applying the photogrammetry colour information to her for a final texture and cleaner paint pass.
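
For readers curious what reducing polycount means in practice, here is a minimal sketch in Python. It uses vertex clustering, a simple automatic technique swapped in for illustration; it is not the ZBrush and Maya workflow described above, and the function name `cluster_decimate` and the toy grid are hypothetical.

```python
def cluster_decimate(vertices, faces, cell):
    """Merge all vertices that fall in the same cubic cell of size `cell`.

    Illustrative only: the first vertex seen in each cell is kept as the
    representative, and triangles that collapse or repeat are dropped.
    """
    cell_to_index = {}
    new_vertices = []
    remap = []  # old vertex index -> new vertex index
    for x, y, z in vertices:
        key = (int(x // cell), int(y // cell), int(z // cell))
        if key not in cell_to_index:
            cell_to_index[key] = len(new_vertices)
            new_vertices.append((x, y, z))
        remap.append(cell_to_index[key])

    new_faces = []
    seen = set()
    for a, b, c in faces:
        a, b, c = remap[a], remap[b], remap[c]
        if a == b or b == c or a == c:
            continue  # triangle collapsed to an edge or a point
        key = frozenset((a, b, c))
        if key in seen:
            continue  # duplicate of a triangle already kept
        seen.add(key)
        new_faces.append((a, b, c))
    return new_vertices, new_faces


# Toy "high resolution" surface: a flat 4 x 4 grid of quads (32 triangles).
n = 4
vertices = [(float(i), float(j), 0.0) for j in range(n + 1) for i in range(n + 1)]
faces = []
for j in range(n):
    for i in range(n):
        v0 = j * (n + 1) + i
        v1, v2, v3 = v0 + 1, v0 + n + 2, v0 + n + 1
        faces += [(v0, v1, v2), (v0, v2, v3)]

low_vertices, low_faces = cluster_decimate(vertices, faces, cell=2.0)
print(len(vertices), len(faces), "->", len(low_vertices), len(low_faces))
```

A larger `cell` value merges more vertices and lowers the triangle count further, at the cost of surface detail; that lost detail is what baking from the high-resolution model is meant to recover.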

Above: RealityCapture was used to mesh the bones, then ZBrush was one of the programmes used to 'flesh out' Ms Blue.

Can you talk us through how the model was fed into game engine Unity to create interactive animations?

We rigged and animated Ms. Blue with cameras inside Maya, then exported the models and animation to the development team, Cavylabs. With our background in film, games and cinematics, as well as being early adopters of Unreal Engine, we understand the general workflow. But the folks over at Cavylabs helped us to implement the augmented reality techniques, and used their expertise to fine-tune the playback inside Unity. A true team effort.

Can you expand on the next phase, the repairing of the actual analogue skeleton using Swellcycle 3D printers and shrimp paste putty?!

Our job was mainly to prepare and hand off the high-resolution models to Swellcycle for 3D printing. That process involved exporting the cleaned-up, high-resolution geometry from ZBrush, and then processing the meshes through Autodesk’s Fusion CAD software; the results were watertight assets that could be printed at life-size scale.
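
As a rough illustration of what "watertight" means for a printable mesh, the sketch below checks that every edge of a triangle mesh is shared by exactly two triangles, so the surface has no holes. It is a simplified Python example, not the check Fusion performs; the function name `is_watertight` and the tetrahedron test data are hypothetical.

```python
from collections import Counter


def is_watertight(faces):
    """Return True if every edge is shared by exactly two triangles.

    `faces` is a list of (a, b, c) vertex-index triples. This is only the
    basic edge-count test; it does not check for self-intersections or
    inconsistent face orientation.
    """
    edge_counts = Counter()
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            edge_counts[(min(u, v), max(u, v))] += 1
    return bool(edge_counts) and all(n == 2 for n in edge_counts.values())


# A closed tetrahedron, and the same shape with one face missing.
tetrahedron = [(0, 1, 2), (0, 3, 1), (1, 3, 2), (2, 3, 0)]
open_shell = tetrahedron[:-1]

print(is_watertight(tetrahedron))  # True
print(is_watertight(open_shell))   # False
```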

Above: The Halon team's many detailed scans of Ms. Blue's bones helped create an accurate digital skeleton.

Do you think using digital replicas of precious artefacts in this way will become the standard as sustainability becomes more pressing?

This project is a blueprint for what's possible when high-end visualization technologies are used for preservation and conservation. As reality capture and visualization technologies become more accessible, we'll see more museums and research centers partnering with artists and VFX teams to digitally preserve history and create innovative educational tools.

This project of digitising a blue whale skeleton exemplifies how VFX technologies developed for film have been adapted for use in other initiatives.

I think we’ve already seen this trend beginning to take shape, but in different forms. During Covid, for instance, when travel was limited we saw lots of places scanned and made available to the public for educational purposes. Augmented reality has taken a new form with the release of the Apple Vision Pro as well. Where VR seems to have been slowly trailing off, AR is available in everyone's pockets, and in many classrooms today. AR is making education fun and giving people a new way to experience places and things they wouldn’t usually have access to.

We've seen wars, weather, and many other external factors ruin relics of the past, so the use case for this technology should become a regular path forward. The ability to use the techniques that are standard in the VFX community, paired with the knowledge from the science community, can make beautiful experiences for folks of all ages. It's a lovely time to be alive!